Streaming a List from GPT

Streaming is a hugely important part of the UX when building with LLMs like OpenAI’s GPT models because users see results as soon as they are available instead of waiting until the LLM has finished. But while streaming makes for a great UX, it introduces challenges that need to be addressed:

- How do you handle invalid inputs and errors in general?

- How do you take a stream of text and convert it to something with more structure, like a list?

- How can you validate GPT’s output before the user sees it, for example when the user gives it an input it doesn’t understand?

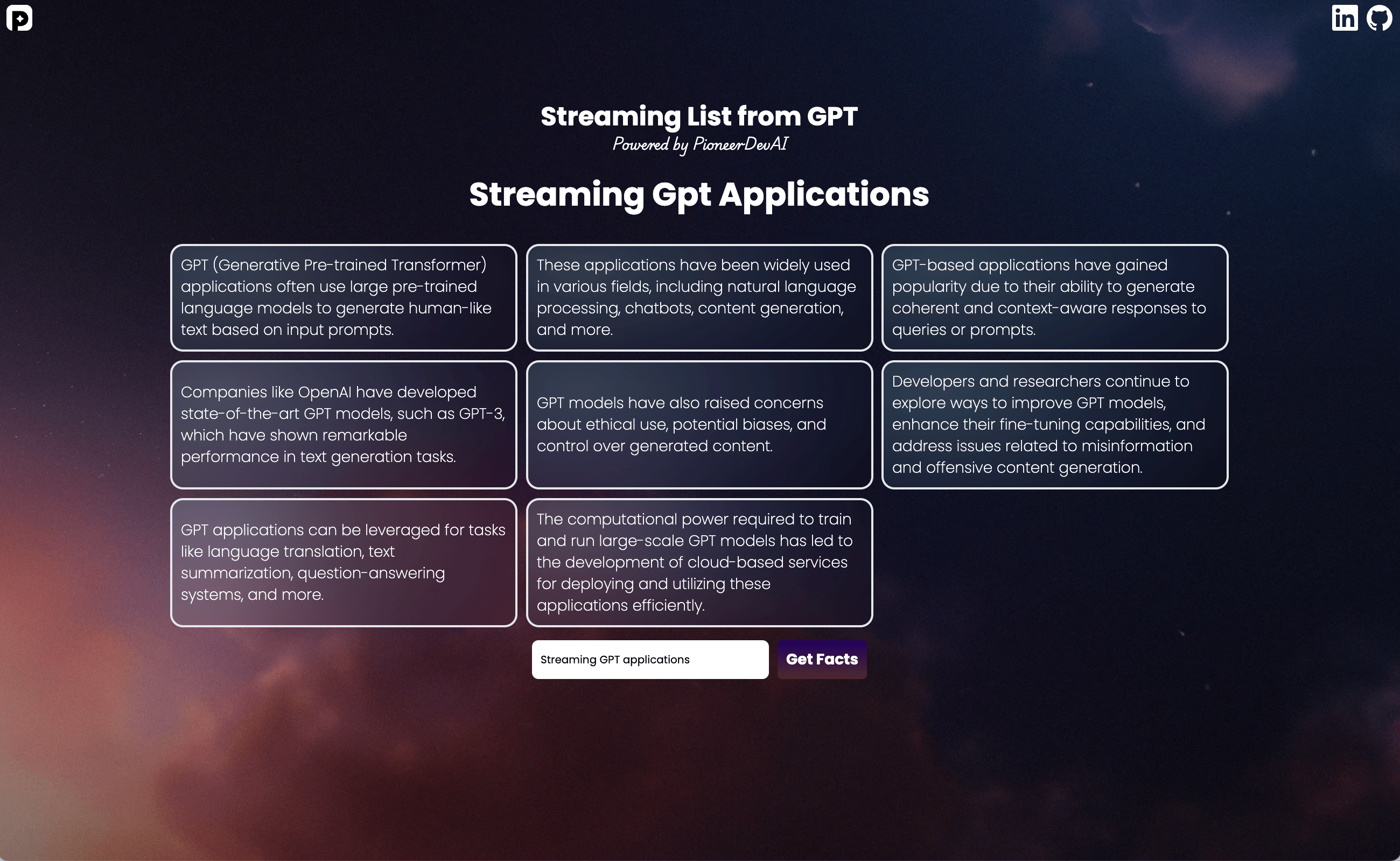

To answer some of these questions, I built a simple Remix application on where you can view the code in this GitHub link that generates a list of facts about a user-provided topic and lets the user edit those facts. Here is a screenshot of what it looks like:

Building it Out

The key idea in this application is to take a continuous stream of text, parse it, and turn it into an array of facts stored in React state. Because the facts live in an array rather than in a blob of text or markdown, each one can be rendered as its own element, as shown in the following code snippet:

// parentheses give the arrow function an implicit return, so each <div> is actually rendered

facts.map((fact, index) => (

<div

key={index}

className="w-full bg-transparent mx-auto duration-300 delay-300"

>

<div className="fact-item-border border-2 p-2 font-extralight text-white text-sm h-full flex items-center justify-center">

{fact}

</div>

</div>

));

Because each fact is its own array entry, it can be rendered, and later edited, independently of the others.

So, how did I build this? Let’s start with the GPT layer and work our way back to the UI. First, I made a small wrapper function around chat completions:

/* gpt.server.ts */

import OpenAI from "openai";

const openai = new OpenAI({

apiKey: process.env.OPENAI_API_KEY,

});

async function* createStreamingCompletion(

messages: OpenAI.Chat.ChatCompletionMessageParam[]

) {

const stream = await openai.chat.completions.create({

model: "gpt-3.5-turbo-0125",

messages,

stream: true,

});

for await (const part of stream) {

const delta = part.choices[0]?.delta?.content || "";

yield delta;

}

}

Notice the stream: true argument, which tells OpenAI to stream the response. Because createStreamingCompletion is an async generator function, calling it returns an AsyncGenerator that yields strings. I also chose the latest GPT-3.5-turbo model at the time of writing because it is significantly less expensive than the GPT-4 variants.
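To make the || "" fallback concrete, here is a minimal sketch that runs the same extraction over hand-written chunks. The StreamPart type and the sample chunks are assumptions that only mimic the shape used above; they are not the SDK's own types, and real chunks carry more fields.

```typescript
// Assumed, simplified shape of one streamed chunk (the real SDK type has more fields).
type StreamPart = {
  choices: Array<{ delta?: { role?: string; content?: string | null } }>;
};

// Same expression as in createStreamingCompletion: fall back to "" when a
// chunk carries no text.
function deltaText(part: StreamPart): string {
  return part.choices[0]?.delta?.content || "";
}

// Hand-written sample chunks, for illustration only.
const sampleParts: StreamPart[] = [
  { choices: [{ delta: { role: "assistant", content: "" } }] },
  { choices: [{ delta: { content: "* Dogs" } }] },
  { choices: [{ delta: { content: " are mammals." } }] },
  { choices: [{ delta: {} }] }, // a chunk without content
  { choices: [] }, // defensive case: no choices at all
];

const streamedText = sampleParts.map(deltaText).join("");
console.log(streamedText); // * Dogs are mammals.
```

Without the fallback, the chunks that carry no content would yield undefined, which the client would then have to filter out.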

Next, I wrap that in a function that takes the user’s topic, builds the messages (the system prompt plus the topic), and passes them to createStreamingCompletion.

/* gpt.server.ts */

const LIST_FACTS_SYSTEM_PROMPT = `

List facts about the input the user provides.

Each fact should be structured as a list with each item prepended with a "*"

`;

export async function generateFactCompletion(topic: string) {

const messages: OpenAI.Chat.ChatCompletionMessageParam[] = [

{ role: "system", content: LIST_FACTS_SYSTEM_PROMPT },

{ role: "user", content: topic },

];

return createStreamingCompletion(messages);

}

As shown, this is a case of zero-shot learning, but you could also add a few examples to make it few-shot learning. With an LLM that has fewer parameters than GPT-3.5, you’d likely have to give it some examples, but GPT-3.5 handles it well.
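For comparison, a few-shot version might look roughly like the sketch below. The ChatMessage type is a local stand-in for the SDK's message type, and buildFewShotMessages and the example exchange are hypothetical; the application itself uses the zero-shot version above.

```typescript
// Local stand-in for the SDK's message type, so the sketch is self-contained.
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

const LIST_FACTS_SYSTEM_PROMPT = `
List facts about the input the user provides.
Each fact should be structured as a list with each item prepended with a "*"
`;

// Hypothetical worked example shown to the model before the real topic.
const FEW_SHOT_EXAMPLE: ChatMessage[] = [
  { role: "user", content: "Dogs" },
  {
    role: "assistant",
    content: "* Dogs are mammals.\n* Dogs are domesticated animals.",
  },
];

// Build the message list: system prompt, example exchange, then the real topic.
function buildFewShotMessages(topic: string): ChatMessage[] {
  return [
    { role: "system", content: LIST_FACTS_SYSTEM_PROMPT },
    ...FEW_SHOT_EXAMPLE,
    { role: "user", content: topic },
  ];
}

console.log(buildFewShotMessages("Cats").map((message) => message.role));
```

The example exchange shows the model the exact output format, which is what a smaller model would be more likely to need.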

The next layer is the route layer, which looks something like this:

/* facts.stream.ts */

import { type LoaderFunctionArgs } from "@remix-run/node";

import { eventStream } from "~/event-stream.server";

import { generateFactCompletion } from "~/gpt.server";

import { Action } from "~/types";

export async function loader({ request }: LoaderFunctionArgs) {

const url = new URL(request.url);

const topic = url.searchParams.get("topic");

return eventStream(request.signal, function setup(send) {

const sendMessage = (payload: Action) => {

send({ event: "message", data: JSON.stringify(payload) });

};

if (!topic) {

// Send an error message if the topic parameter is missing

sendMessage({

action: "error",

message: "Missing topic parameter",

});

return () => {};

}

generateFacts({ sendMessage, topic });

return function clear() {};

});

}

async function generateFacts({

sendMessage,

topic,

}: {

sendMessage: (payload: Action) => void;

topic: string;

}) {

const factGenerator = generateFactCompletion(topic);

for await (const chunk of await factGenerator) {

sendMessage({

action: "facts",

chunk,

});

}

sendMessage({ action: "stop" });

}

eventStream is built on a ReadableStream and lets us push messages to the client. Each time we get a chunk from factGenerator, we send it to the client as a message. Once the generator is exhausted, we send the stop action to tell the client it can stop listening. We also send an error message if the topic parameter is missing. If you’re curious about how eventStream works, take a look at the remix-utils package.
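The Action type imported from ~/types is not shown here. Judging from the three payloads the route sends, it is probably close to the union below; treat that as an assumption. The toSseFrame helper is also hypothetical and only illustrates what a server-sent event looks like on the wire; in the application that framing is handled by eventStream.

```typescript
// Assumed shape of the Action type imported from ~/types (not shown in the
// post); inferred from the three payloads the route sends.
type Action =
  | { action: "facts"; chunk: string }
  | { action: "error"; message: string }
  | { action: "stop" };

// Hypothetical helper: frame one payload as a server-sent event.
// An event is a block of "field: value" lines terminated by a blank line.
function toSseFrame(payload: Action): string {
  return `event: message\ndata: ${JSON.stringify(payload)}\n\n`;
}

const sseFrame = toSseFrame({ action: "facts", chunk: "* Dogs" });
console.log(sseFrame);
```

Because JSON.stringify escapes newlines, a chunk that contains a line break still fits on a single data line.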

Next, let’s look at the client and what happens when the user presses the submit button which triggers a form submission:

/* _index.tsx */

const handleFormSubmit = async (event: React.FormEvent) => {

event.preventDefault();

const formData = new FormData(event.target as HTMLFormElement);

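const topic = String(formData.get("topic") ?? "");

const sse = new EventSource(

// encode the topic so spaces and characters like "&" survive the query string

`/facts/stream?topic=${encodeURIComponent(topic)}`

);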

sse.addEventListener("message", (event) => {

const parsedData: Action = JSON.parse(event.data);

if (parsedData.action === "facts") {

handleFactChunk(parsedData.chunk);

} else if (parsedData.action === "error") {

setError(parsedData.message);

sse.close();

} else if (parsedData.action === "stop") {

sse.close();

}

});

sse.addEventListener("error", () => {

setError("An unexpected error occurred");

sse.close();

});

};

On submit, instead of doing a traditional form submission, we take the form data and use it to open a stream from /facts/stream with the EventSource class. Each time we get a message event, we parse its data and handle the chunk. Note that errors can arrive in two ways: ones we raise ourselves, which are handled in the parsedData.action === "error" branch, and ones that happen at the stream level, which are handled by the error event listener. Once we receive the stop action, we close the event source.
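One way to make this branching easier to test is to pull it out of the event listener into a pure function. The sketch below is not part of the application: the Action union is an assumed shape for the type in ~/types, and parseAction, interpretMessage, and ClientStep are hypothetical names.

```typescript
// Assumed shape of the Action type from ~/types.
type Action =
  | { action: "facts"; chunk: string }
  | { action: "error"; message: string }
  | { action: "stop" };

// What the client should do next; "fail" and "done" both mean "close the stream".
type ClientStep =
  | { kind: "append"; chunk: string }
  | { kind: "fail"; message: string }
  | { kind: "done" };

// JSON.parse throws on malformed input, so guard it.
function parseAction(data: string): Action | null {
  try {
    return JSON.parse(data) as Action;
  } catch {
    return null;
  }
}

function interpretMessage(data: string): ClientStep {
  const parsed = parseAction(data);
  if (parsed === null) {
    return { kind: "fail", message: "An unexpected error occurred" };
  }
  switch (parsed.action) {
    case "facts":
      return { kind: "append", chunk: parsed.chunk };
    case "error":
      return { kind: "fail", message: parsed.message };
    case "stop":
      return { kind: "done" };
    default:
      // an action this client does not know about
      return { kind: "fail", message: "An unexpected error occurred" };
  }
}

console.log(interpretMessage('{"action":"stop"}'));
```

The event listener would then only need to map each step onto handleFactChunk, setError, and sse.close().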

The final bit is how we process each chunk and create the list of facts:

/* _index.tsx */

const handleFactChunk = useCallback(

(chunk: string) => {

setFacts((prevFacts) => {

// copy the array so we never mutate React state in place

const nextFacts = [...prevFacts];

// every "*" in the chunk starts a new fact

const [beforeStar, ...newFacts] = chunk.split("*");

// text before the first "*" belongs to the fact in progress

if (nextFacts.length > 0) {

nextFacts[nextFacts.length - 1] += beforeStar;

}

// everything after a "*" becomes a new fact

nextFacts.push(...newFacts);

// nothing is shown until the first "*" has started a fact

if (nextFacts.length > 0) {

setIsWaiting(false); // we have content to show, so stop waiting

}

// trim whitespace at the front and remove newlines

return nextFacts.map((fact) => fact.trimStart().replace(/\n/g, ""));

});

},

[setFacts]

);

This code treats every * as the signal to start a new fact. If a chunk contains a *, we split it: everything before the * is appended to the last fact we have, and everything after it becomes the start of a new fact. Chunks without a * are appended to the fact in progress, and any text that arrives before the first * is ignored.
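The same logic can be written as a pure function and checked without React. This is a sketch rather than the code the application runs; appendChunk and the sample chunks are hypothetical.

```typescript
// Pure version of the chunk handler: returns a new array and never mutates
// the one it was given.
function appendChunk(facts: string[], chunk: string): string[] {
  const next = facts.slice();
  const pieces = chunk.split("*");

  // Text before the first "*" continues the fact in progress. It is dropped
  // if no fact has been started yet (for example a preamble before the list).
  if (next.length > 0) {
    next[next.length - 1] += pieces[0];
  }

  // Every piece after a "*" starts a new fact.
  for (let i = 1; i < pieces.length; i++) {
    next.push(pieces[i]);
  }

  // Strip leading whitespace (same effect as trimStart) and remove newlines.
  return next.map((fact) => fact.replace(/^\s+/, "").replace(/\n/g, ""));
}

// Made-up chunks, split the way a token stream might split them.
const sampleChunks = ["Sure", "!\n", "*", " Dogs", " are", " mammals", ".\n", "* Cats", " purr."];
const parsedFacts = sampleChunks.reduce<string[]>(appendChunk, []);
console.log(parsedFacts); // two facts: "Dogs are mammals." and "Cats purr."
```

Note that a response without any * produces an empty array, so nothing would be rendered for it.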

Output Validation

So how do you validate that the output of GPT actually makes sense before rendering it on the screen? The way I did this is by feeding the full output of GPT back into GPT with a different prompt:

/* gpt.server.ts */

const FACT_CHECKING_SYSTEM_PROMPT = `

If the assistant is replying with something like "I'm sorry but the input you

provided doesn't contain recognizable information", return "NO" otherwise return "YES".

Only respond "NO" if it is clear the assistant doesn't have facts to provide.

Respond "YES" if the assistant has provided facts, even if they are incorrect.

`;

export async function checkCompletionIsValid(text: string) {

/**

* This function is used to check if the completion is valid.

* If it's not, we can prompt the user to provide a new input.

*/

const messages: OpenAI.Chat.ChatCompletionMessageParam[] = [

{ role: "system", content: FACT_CHECKING_SYSTEM_PROMPT },

{ role: "assistant", content: text },

];

const completion = await createSimpleCompletion(messages);

return completion !== "NO";

}

async function createSimpleCompletion(

messages: OpenAI.Chat.ChatCompletionMessageParam[]

) {

const output = await openai.chat.completions.create({

model: "gpt-3.5-turbo-0125",

messages,

});

return output.choices[0]?.message.content || "";

}

/* facts.stream.ts */

async function generateFacts({

sendMessage,

topic,

}: {

sendMessage: (payload: Action) => void;

topic: string;

}) {

const factGenerator = generateFactCompletion(topic);

let rawText = "";

for await (const chunk of await factGenerator) {

rawText += chunk;

sendMessage({

action: "facts",

chunk,

});

}

const isValid = await checkCompletionIsValid(rawText);

if (!isValid) {

sendMessage({

action: "error",

message: "Invalid input, please search another term",

});

}

sendMessage({ action: "stop" });

}

Once we have streamed all the tokens back to the client, we check the output with another prompt that returns a simple YES or NO. The beauty of this solution is that if GPT gets a nonsensical input like adfasd, it won’t respond with a list but with something like: I'm sorry, but the input provided is not valid. Because that response has no * to denote a list, we never render anything in the client, even though we’ve already streamed the full response back. Genius, right?
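One caveat: the strict completion !== "NO" comparison treats anything other than the exact string NO as valid, so a reply such as "No." would pass the check. If that turns out to matter, a more lenient comparison could look like the sketch below. isValidVerdict is a hypothetical helper, not part of the application.

```typescript
// Hypothetical helper: interpret the checker's reply leniently.
// Returns false only when the reply starts with the word "NO" (any case,
// optional surrounding whitespace or trailing punctuation).
function isValidVerdict(reply: string): boolean {
  const normalized = reply.replace(/^\s+|\s+$/g, "").toUpperCase();
  return !/^NO\b/.test(normalized);
}

console.log(isValidVerdict("NO")); // false
console.log(isValidVerdict("No.")); // false
console.log(isValidVerdict("YES")); // true
```

Like the original comparison, this treats an empty or unexpected reply as valid, so a flaky checker never blocks real facts.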

When I first started this demo, I thought the better solution would be to just use one prompt for the fact generator like this:

const LIST_FACTS_SYSTEM_PROMPT = `

List facts about the input the user provides.

Provide up to 8 facts.

Each fact should be structured as a list with each item prepended with a "*"

Only use a "*" to start each fact.

If you cannot provide any facts or the input makes no sense, return ERROR_BAD_INPUT.

`;

That works most of the time, but with certain valid inputs (example: Stephen Cefali), it gives up too easily and returns no facts. My guess is that telling GPT to return ERROR_BAD_INPUT when it gives up introduces a bias that makes it give up more easily than it otherwise would.

Additional Thoughts

You might ask why I chose this list format instead of JSON. There are a few reasons why using a * to denote a list was easier to handle:

- When data is still streaming, the intermediate state is not valid JSON. Instead, it looks something like:

{"facts": ["Dogs are

To parse it as valid JSON while streaming, you have to add the closing brackets, braces, and quotation marks yourself.

- If OpenAI could output a pure list instead of an object with a list inside, it would be easier to parse. But as of the date of writing this, GPT wants the top-level entity to be an object instead of a list. If you ask it to spit out facts like:

["Dogs are mammals.", "Dogs are domesticated animals."]

it starts streaming like:

{"fact": "Dogs are

instead of:

["Dogs are
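To give a sense of what adding the closing characters yourself involves, here is a naive sketch. closePartialJson is a hypothetical helper, not something the application uses. It only covers prefixes that end inside a string value or right after a complete value; a prefix that ends inside a key, a number, a literal such as true, or a \u escape would still fail to parse.

```typescript
// Hypothetical helper: close an incomplete JSON prefix so JSON.parse accepts it.
function closePartialJson(partial: string): string {
  const closers: string[] = []; // closing characters still owed, innermost last
  let inString = false;
  let escaped = false;

  for (let i = 0; i < partial.length; i++) {
    const ch = partial[i];
    if (inString) {
      if (escaped) escaped = false;
      else if (ch === "\\") escaped = true;
      else if (ch === '"') inString = false;
      continue;
    }
    if (ch === '"') inString = true;
    else if (ch === "{") closers.push("}");
    else if (ch === "[") closers.push("]");
    else if (ch === "}" || ch === "]") closers.pop();
  }

  let repaired = partial;
  if (inString) {
    // drop a dangling backslash, then close the open string
    if (escaped) repaired = repaired.slice(0, -1);
    repaired += '"';
  } else {
    // a trailing comma would make the closed document invalid
    repaired = repaired.replace(/,\s*$/, "");
  }
  // close the innermost structure first
  return repaired + closers.reverse().join("");
}

const repairedJson = closePartialJson('{"facts": ["Dogs are');
console.log(repairedJson); // {"facts": ["Dogs are"]}
console.log(JSON.parse(repairedJson).facts.length); // 1
```

Even this small version has to track strings, escapes, and nesting, and it would need to run again on every chunk, which is why splitting on * felt simpler.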

There may be existing solutions that elegantly handle streaming JSON by automatically adding the closing brackets and braces, but I thought my solution was good enough. If you are aware of any that work well, don’t hesitate to reach out and let me know!