When to Use LLMs in Software Development (and When to Be Extra Careful)

Large Language Models (LLMs) are changing the way developers approach coding, documentation, and problem-solving. However, knowing when to leverage LLMs—and when to be cautious—can make all the difference in maximizing their benefits while minimizing potential risks.

In this guide, we break down the best use cases for LLMs, their strengths and weaknesses, common mistakes, and the tools available to enhance your workflow.

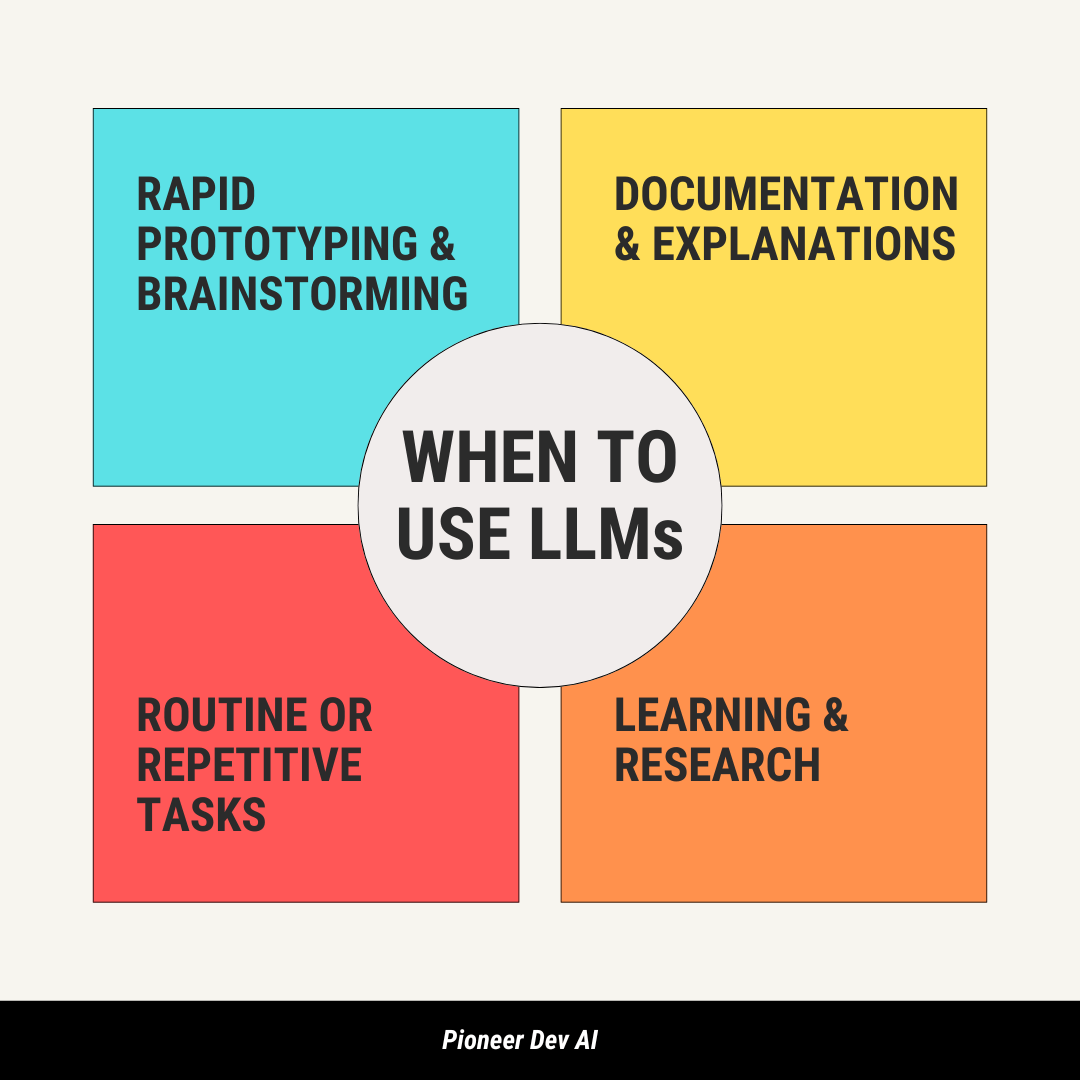

When to Use LLMs

LLMs can significantly improve developer productivity, but their strengths lie in specific areas. Here are some of the best scenarios where AI-assisted coding can be a game-changer.

1. Rapid Prototyping & Brainstorming

LLMs are excellent for generating boilerplate code, exploring multiple design ideas, and drafting initial versions of features before refining them manually.

2. Documentation & Explanations

They can generate comments, documentation, and explanations of complex code snippets, making it easier to onboard new team members and maintain clarity across projects.

3. Routine or Repetitive Tasks

From generating unit tests and refactoring simple code segments to creating configuration files, LLMs save developers time by automating mundane coding chores.

4. Learning & Research

Need to understand an unfamiliar API or framework? LLMs act as a great starting point for exploring new technologies and brainstorming potential solutions.

When to Be Careful with LLMs

Despite their capabilities, LLMs are not infallible. In some cases, relying too much on AI-generated code can lead to serious risks, errors, or inefficiencies.

1. Critical Production Code

LLMs can introduce subtle bugs that may go unnoticed. Never rely on their output for mission-critical systems without rigorous review and testing.

2. Up-to-Date or Sensitive Information

Since LLMs have knowledge cutoffs, they might suggest outdated APIs or security practices. Always cross-check their outputs with the latest official documentation.

3. Tasks Requiring Complete Context

An LLM only sees what is in the prompt. When a task depends on context it was not given, the model fills the gaps from its vast, diverse training data, sometimes blending conflicting or incomplete facts. The result can be fabricated details (hallucinations).

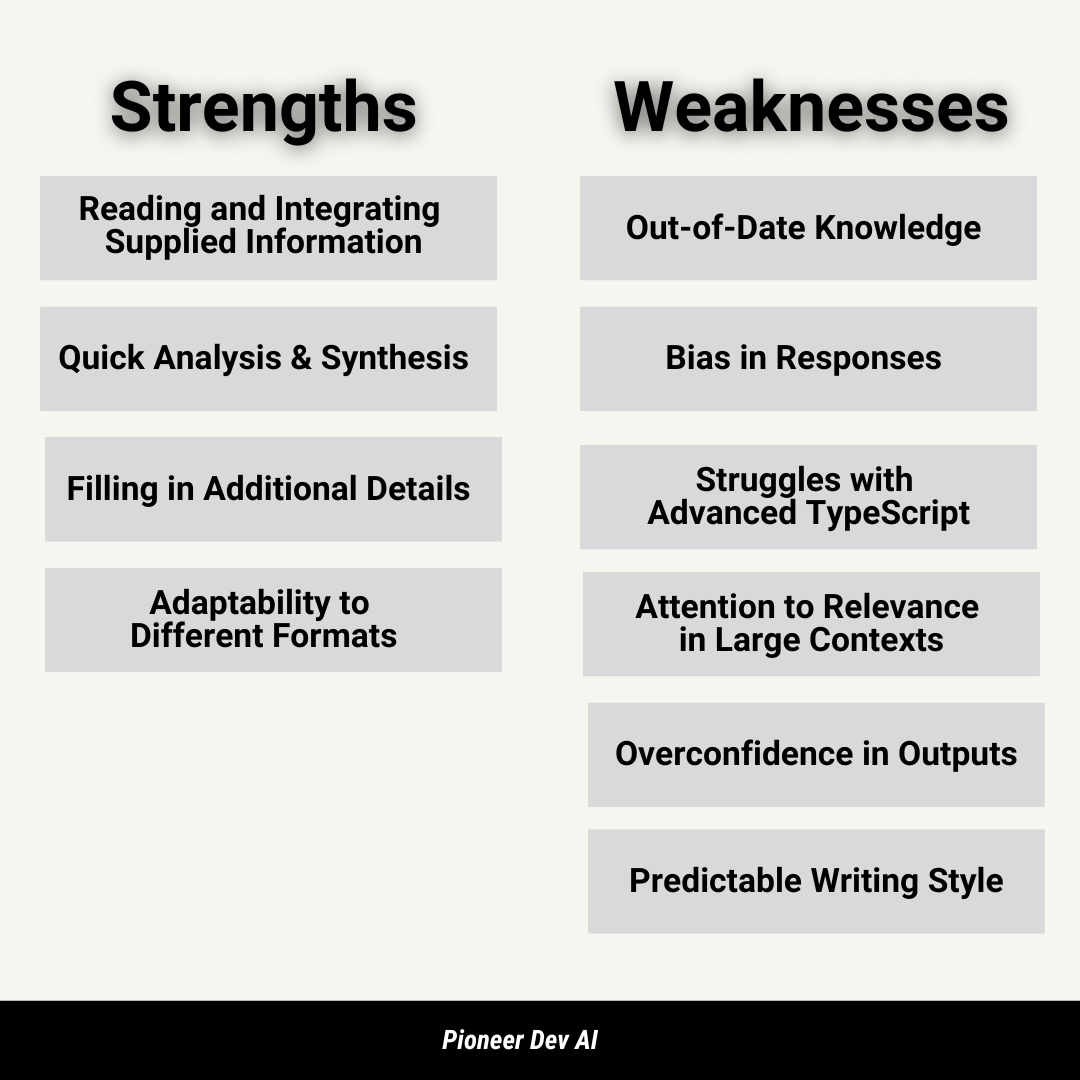

Strengths of LLMs

LLMs are powerful because they can analyze large amounts of information, synthesize insights, and adapt to different formats. Here’s a closer look at their key strengths.

1. Reading and Integrating Supplied Information

LLMs excel at synthesizing input, making them adaptable to the specifics of your prompt.

2. Quick Analysis and Synthesis

They rapidly generate insights and structure ideas, making them valuable for brainstorming and problem-solving.

3. Filling in Additional Details

With partial context, LLMs can intelligently expand or refine content, bridging gaps with relevant details.

4. Adaptability to Different Formats

Whether writing code, documentation, or prose, LLMs adjust their output style to fit the required format.

Weaknesses of LLMs

While LLMs are powerful, they come with limitations that developers must be aware of. Understanding these weaknesses can help mitigate risks and improve the quality of AI-assisted development.

1. Out-of-Date Knowledge

LLMs may suggest deprecated APIs or practices. The best way to mitigate this is by feeding up-to-date context into your prompts.

2. Bias in Responses

LLMs reflect biases in their training data, which can reinforce incorrect assumptions. Critical evaluation is necessary.

3. Struggles with Advanced TypeScript

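LLMs can still struggle with advanced TypeScript types, such as deeply nested or heavily constrained generics, so check that generated types actually compile and mean what you intend.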
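As a rough illustration of the kind of type-level code that deserves a manual check, the sketch below combines a generic constraint with a mapped, conditional type. The names `KeysOfType`, `pluck`, and `TeamMember` are hypothetical and exist only for this example.

```typescript
// Hypothetical example: the keys of T whose values are assignable to V.
type KeysOfType<T, V> = {
  [K in keyof T]: T[K] extends V ? K : never;
}[keyof T];

// Generic helper: collect one property from every item, preserving its type.
function pluck<T, K extends keyof T>(items: readonly T[], key: K): T[K][] {
  return items.map((item) => item[key]);
}

interface TeamMember {
  id: number;
  name: string;
  email: string;
}

const teamMembers: TeamMember[] = [
  { id: 1, name: "Ada", email: "ada@example.com" },
  { id: 2, name: "Linus", email: "linus@example.com" },
];

// Only "name" | "email" are accepted here; assigning "id" is a compile-time error.
const stringKey: KeysOfType<TeamMember, string> = "name";
const memberNames: string[] = pluck(teamMembers, stringKey);
```

When an assistant generates types like these, running the compiler in strict mode is a quick way to confirm they hold up.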

4. Attention to Relevance in Large Contexts

While newer models handle large inputs better, feeding in too much irrelevant data can dilute key information.
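One way to keep irrelevant material out of a prompt is to filter candidate snippets before sending them. The sketch below uses a deliberately simple keyword-overlap score. The `Snippet` shape and both function names are assumptions made for illustration, and a real project might prefer embeddings or a search index instead.

```typescript
interface Snippet {
  source: string;
  text: string;
}

// Score a snippet by how many distinct query terms it contains (case-insensitive).
function relevanceScore(snippet: Snippet, query: string): number {
  const terms = query
    .toLowerCase()
    .split(/\W+/)
    .filter((term) => term.length > 2) // skip very short words such as "to" or "a"
    .filter((term, index, all) => all.indexOf(term) === index); // de-duplicate
  const haystack = snippet.text.toLowerCase();
  return terms.filter((term) => haystack.includes(term)).length;
}

// Keep only snippets that match at least one term, best matches first, capped at `limit`.
function selectRelevantSnippets(snippets: Snippet[], query: string, limit: number): Snippet[] {
  return snippets
    .map((snippet) => ({ snippet, score: relevanceScore(snippet, query) }))
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((entry) => entry.snippet);
}
```

Only the snippets returned by `selectRelevantSnippets` would then be pasted into the prompt, so the key information is not diluted.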

5. Overconfidence in Outputs

LLMs present responses with high certainty, even when they’re probabilistic guesses rather than factual answers.

6. Predictable Writing Style

LLMs tend to overuse common phrases and buzzwords, so manual editing is recommended for a more natural tone.

Common LLM Mistakes

Relying on ingrained knowledge

Large Language Models (LLMs) generate responses based on the data they were trained on, but their knowledge isn’t always accurate, especially when dealing with niche, specialized, or rapidly changing topics. If an LLM hasn’t seen enough reliable information about a subject, it might hallucinate (generate incorrect or entirely fictional details) instead of admitting uncertainty.

🔹 Example Mistake: You ask an LLM about a new programming framework that was just released last month. Since the model’s training data might be outdated, it could fabricate syntax, commands, or best practices that don’t actually exist.

🔹 Solution: Always provide relevant and up-to-date context in your prompt. If you need information on a specific framework, API, or industry trend, paste relevant documentation or sources into the prompt to guide the LLM toward accuracy. You can also fact-check the output against official sources before using it in critical applications.

Tip: If the LLM gives a confident answer about something you cannot verify from your own experience, double-check it instead of assuming it is correct.
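The advice to paste documentation into the prompt can be sketched as a small prompt builder. This is a minimal sketch, not a prescribed format: `ReferenceDoc` and `buildGroundedPrompt` are hypothetical names, and the resulting string would be passed to whichever LLM client you already use.

```typescript
interface ReferenceDoc {
  title: string;
  content: string;
}

// Build a prompt that puts up-to-date reference material ahead of the question
// and asks the model to admit when the references do not cover it.
function buildGroundedPrompt(question: string, docs: ReferenceDoc[]): string {
  const references = docs
    .map((doc, index) => `[${index + 1}] ${doc.title}\n${doc.content.trim()}`)
    .join("\n\n");

  return [
    "Answer using only the reference material below.",
    "If the references do not cover the question, say so instead of guessing.",
    "",
    "References:",
    references,
    "",
    `Question: ${question}`,
  ].join("\n");
}
```

Numbering the references also makes it easier to ask the model which source supports each part of its answer.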

Available Tools for Developers

AI-powered development tools plug directly into IDEs and chat-based interfaces. Here are some of the tools available for coding with LLMs.

IDE Integrations

1. Cursor

- AI-powered IDE with contextual code completions and inline suggestions.

- Integrates seamlessly into development workflows.

2. GitHub Copilot

- Works with VS Code and JetBrains IDEs to provide real-time code suggestions.

- Uses contextual clues from your codebase to offer relevant completions.

Chat-Based Interfaces

1. ChatGPT

- Provides conversational AI for debugging, code generation, and brainstorming.

- Ideal for exploring multiple approaches to a coding challenge.

2. Other LLM Chat Tools

- Several emerging assistants offer similar functionality for interactive problem-solving.

Cursor Models

1. GPT-4o

- Excels at generating robust, comprehensive code.

- Best for deep, detailed coding needs, though it may be slower.

2. Claude 3.5 Sonnet

- Highly effective for frontend tasks and natural language-driven coding interactions.

- Many developers online praise its high-quality code suggestions.

Examples of LLM Applications

Want to see AI-assisted development in action? Here are some examples of where LLMs can enhance coding workflows.

1. Analysis

- Generate price calculations based on predefined constraints.

- Example: Using models like o3-mini-pro to calculate a price from given parameters.
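Because a model's arithmetic can be confidently wrong, it may help to keep a deterministic version of the same calculation to compare against. The sketch below assumes a made-up set of constraints (base price, per-seat price, percentage discount, minimum charge). None of these parameters come from the original example.

```typescript
// Hypothetical pricing constraints; amounts are in cents to avoid floating-point drift.
interface PricingConstraints {
  basePriceCents: number;
  perSeatCents: number;
  discountPercent: number; // whole-number percentage, 0 to 100
  minimumCents: number;
}

// Deterministic reference calculation for the same rules given to the model.
function calculatePriceCents(seats: number, constraints: PricingConstraints): number {
  const subtotal = constraints.basePriceCents + seats * constraints.perSeatCents;
  const discounted = Math.round((subtotal * (100 - constraints.discountPercent)) / 100);
  return Math.max(discounted, constraints.minimumCents);
}

// Compare the model's answer with the reference before trusting it.
function matchesExpectedPrice(
  modelAnswerCents: number,
  seats: number,
  constraints: PricingConstraints
): boolean {
  return modelAnswerCents === calculatePriceCents(seats, constraints);
}
```

If the two values disagree, that is a signal to review either the prompt or the constraints rather than to accept the model's figure.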

2. Generating Basic Code

- Task: Add a .env.test file.

- Solution: Copy the environment variables from config.ts and add them to the new file.
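The kind of output this task calls for might look like the sketch below, which renders the contents of a .env.test file from a configuration object. The `exampleConfig` values and the camelCase-to-`UPPER_SNAKE_CASE` naming rule are assumptions for illustration. The real config.ts may be structured differently.

```typescript
// Hypothetical stand-in for the values defined in config.ts.
const exampleConfig: Record<string, string> = {
  apiUrl: "http://localhost:3000",
  databaseUrl: "postgres://localhost:5432/app_test",
  logLevel: "debug",
};

// Convert a camelCase key such as "databaseUrl" to "DATABASE_URL".
function toEnvName(key: string): string {
  return key.replace(/([a-z0-9])([A-Z])/g, "$1_$2").toUpperCase();
}

// Render KEY=value lines suitable for saving as .env.test.
function renderEnvFile(values: Record<string, string>): string {
  return (
    Object.keys(values)
      .map((key) => `${toEnvName(key)}=${values[key]}`)
      .join("\n") + "\n"
  );
}

const envTestContents = renderEnvFile(exampleConfig);
```

Reviewing the rendered text against config.ts before committing it is a cheap way to catch a variable the assistant missed or renamed.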

Conclusion

LLMs are powerful tools for developers when used correctly. They work best for rapid prototyping, documentation, and repetitive tasks, but they require careful oversight in critical applications. By choosing the right tools and understanding their limitations, developers can use LLMs to accelerate development while maintaining quality and accuracy.

Looking to harness the full potential of LLMs in your development workflow? Pioneer Dev AI specializes in building AI-driven solutions tailored to coding, automation, and full-stack SaaS applications.